It's AI Against Corona

The Origin

Coronavirus disease 2019 (COVID-19) was first reported in Wuhan, China, on 31 December 2019.

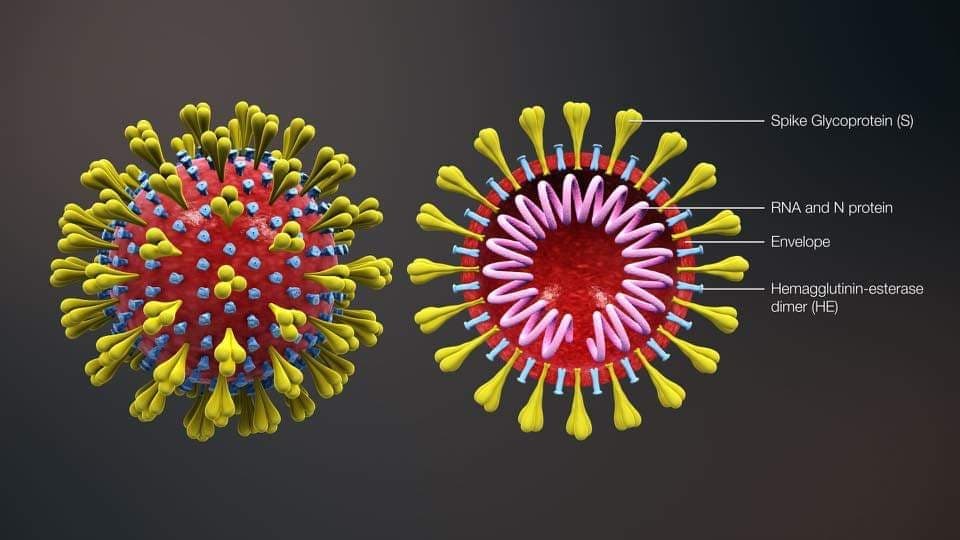

The Naming

The new coronavirus was initially called "2019-nCoV". All coronaviruses have a series of crown-like spikes on their surface, hence the name "corona", derived from Latin and meaning "crown" or "wreath".

SARS, Corona?

You may often hear SARS and Corona used interchangeably. Severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) is the official name of the new virus. This name was chosen because the virus is genetically related to the coronavirus responsible for the SARS outbreak of 2003. The two viruses are related but still different.

COVID-19 is the disease caused by the new coronavirus called 2019-nCoV (2019 novel coronavirus).

So why COVID-19 and not just SARS?

The SARS outbreak of 2003 mostly affected Asia. To reduce uncertainty and to keep the media from confusing 2019-nCoV with SARS, WHO refers to the virus as “the virus responsible for COVID-19” or “the COVID-19 virus”. These designations are not intended to replace the official name of the virus, SARS-CoV-2.

How Long Does 2019-nCoV Survive on Surfaces?

According to initial experiments in a new study by the US Centers for Disease Control and Prevention (CDC), the new coronavirus may survive for up to three days on plastic and stainless steel, and remain active on paper for up to 24 hours.

Risk Groups

Exactly who belongs to this risk group is one of the many details still being researched. But from what we know so far, 2019-nCoV seems to behave like other infectious diseases: anyone whose health is already weakened will have a harder time coping with the new coronavirus.

Smokers also seem to be more at risk.

In general, elderly COVID-19 patients face a higher mortality rate, and the risk increases with age, as data from China shows. Professor Uwe Liebert, director of virology at Leipzig University Hospital, says: "People aged 70 and above are particularly at risk of a severe course of illness from the coronavirus." According to data from China, everyone aged 60 or older is at elevated risk from COVID-19. The fatality rate in China was highest among those over 80 years old.

Just another form of influenza?

Stop saying that Corona is "something like the flu". It is not: COVID-19 is more dangerous than influenza. In a typical winter, 10 to 15 percent of the population are infected with the flu virus. Around one percent of those infected have to be hospitalized, and about one in a thousand dies. Depending on the flu season, the flu death rate is sometimes slightly higher.

COVID-19 has a much more severe course than the flu. About 20 percent of all people who tested positive had to be hospitalized, and five percent needed mechanical ventilation. Experience so far shows that the disease is fatal in at least one percent of all patients. COVID-19 thus has a death rate at least ten times higher than that of the common flu.
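To make the comparison concrete, the short Python sketch below restates the percentages quoted above per 1,000 people and computes the ratios. It uses no data beyond those figures. Keep in mind that the flu figures refer to infected people while the COVID-19 figures refer to people who tested positive, so the ratios are only rough.

```python
# Rough comparison of the flu and COVID-19 figures quoted above, per 1,000 people.
# Caveat: the flu figures refer to infected people, the COVID-19 figures to people
# who tested positive, so the denominators are not strictly comparable.
FLU_RATES = {"hospitalized": 0.01, "died": 0.001}
COVID_RATES = {"hospitalized": 0.20, "ventilated": 0.05, "died": 0.01}


def per_thousand(rate: float) -> float:
    """Convert a fraction such as 0.01 into cases per 1,000 people."""
    return rate * 1000


def ratio(outcome: str) -> float:
    """How many times more frequent an outcome is for COVID-19 than for the flu."""
    return per_thousand(COVID_RATES[outcome]) / per_thousand(FLU_RATES[outcome])


if __name__ == "__main__":
    for outcome in ("hospitalized", "died"):
        print(f"{outcome}: flu {per_thousand(FLU_RATES[outcome]):g}, "
              f"COVID-19 {per_thousand(COVID_RATES[outcome]):g} per 1,000 "
              f"(about {ratio(outcome):g}x)")
    print(f"ventilated (COVID-19): {per_thousand(COVID_RATES['ventilated']):g} per 1,000")
```

Per 1,000 people, that is roughly 10 hospitalizations and 1 death for the flu versus 200 hospitalizations and 10 deaths for COVID-19, which is where the "at least ten times" comes from.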

However, this rate also depends on how well prepared the healthcare system is. If case numbers grow rapidly, they can overwhelm intensive care capacity. Countries that take drastic containment measures at an early stage of the outbreak can expect a lower death rate.
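Why rapid growth matters can be shown with a toy calculation. The Python function below assumes, hypothetically, that cumulative cases double every few days and that a fixed share of them (here the five percent needing ventilation quoted above) occupies intensive care beds at the same time. The starting cases, bed count and doubling times are made-up, and ignoring recoveries overstates demand; the point is only how strongly the doubling time shifts the day on which capacity runs out.

```python
from typing import Optional


def days_until_overwhelmed(initial_cases: float, doubling_days: float,
                           ventilation_share: float, icu_beds: int,
                           max_days: int = 365) -> Optional[int]:
    """Return the first day on which cases needing ventilation exceed icu_beds.

    Simplifying assumptions (hypothetical, for illustration only):
    - cumulative cases double every `doubling_days` days,
    - a fixed share of all cases needs ventilation at the same time
      (nobody recovers), which overstates real demand.
    Returns None if capacity is not exceeded within max_days.
    """
    for day in range(max_days + 1):
        cases = initial_cases * 2 ** (day / doubling_days)
        if cases * ventilation_share > icu_beds:
            return day
    return None


if __name__ == "__main__":
    # 100 initial cases, 5 percent needing ventilation, 1,000 beds (all made-up).
    for doubling in (3, 7):
        day = days_until_overwhelmed(100, doubling, 0.05, 1000)
        print(f"doubling every {doubling} days -> capacity exceeded on day {day}")
```

With these made-up inputs, doubling every 3 days exceeds capacity on day 23, while doubling every 7 days pushes that to day 54: slowing the spread buys weeks of time.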

It is not easy to see why some countries suffer more from the coronavirus than others. Many different factors may play a role: How old is the population? How good are the healthcare system and nursing care in general? How quickly were quarantine measures put in place?

AI against Corona

Data Science in Epidemiology

The immense complexity of modern mobility makes containing the spread of a virus extremely challenging. For example, the global air traffic network links more than 4,000 airports worldwide through more than 25,000 connections. More than 3 billion passengers are transported every year, together traveling more than 14 billion km a day.

In 14th-century Europe, mobility was almost exclusively local. As a result, the Black Death spread from south to north at a speed of approximately 4 to 5 km per day. Modern epidemics are much faster, covering 100 to 400 km per day.

Highly developed computer simulations are a crucial tool for predicting the paths of epidemics. Such simulations are complex and require precise knowledge of, and data on, viruses transmitted between humans.
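Real epidemic simulations are far more detailed than anything that fits here, but the basic idea can be illustrated with the classic SIR model, which splits a population into susceptible, infected and recovered people. This is a swapped-in textbook technique, not the method used in the work discussed in this article, and the transmission rate `beta` and recovery rate `gamma` are hypothetical values.

```python
def simulate_sir(population: float, initial_infected: float, beta: float,
                 gamma: float, days: int, steps_per_day: int = 10):
    """Integrate the classic SIR equations with simple Euler steps.

    beta:  hypothetical transmission rate per day
    gamma: hypothetical recovery rate (1 / infectious period in days)
    Returns a list of (day, susceptible, infected, recovered) tuples.
    """
    s = float(population - initial_infected)
    i = float(initial_infected)
    r = 0.0
    dt = 1.0 / steps_per_day
    history = [(0, s, i, r)]
    for day in range(1, days + 1):
        for _ in range(steps_per_day):
            new_infections = beta * s * i / population * dt
            new_recoveries = gamma * i * dt
            s -= new_infections
            i += new_infections - new_recoveries
            r += new_recoveries
        history.append((day, s, i, r))
    return history


if __name__ == "__main__":
    # Made-up parameters: R0 = beta / gamma = 3, infectious period of 10 days.
    history = simulate_sir(1_000_000, 10, beta=0.3, gamma=0.1, days=200)
    peak_day, _, peak_infected, _ = max(history, key=lambda row: row[2])
    print(f"peak on day {peak_day} with about {peak_infected:,.0f} people infected")
```

When `beta` is smaller than `gamma`, each infected person infects fewer than one other on average and the outbreak dies out; when it is larger, the simulation produces the familiar rise and peak.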

Fortunately, there are some concepts that might help fight virus outbreaks.

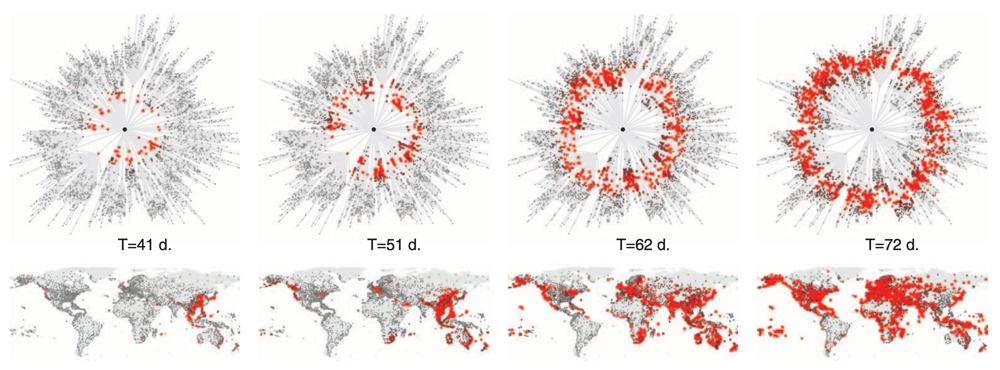

Physicists Dirk Brockmann of Humboldt University Berlin and Dirk Helbing of ETH Zurich have developed an approach to network-driven contagion phenomena. Their mathematical theory says that in the highly networked world of the 21st century, geographical distances are no longer relevant. They must be replaced by so-called "effective distances". "From the perspective of Frankfurt, for example, other metropolises like London, New York and Tokyo are effectively no further away than geographically close places like Bremen, Leipzig or Kiel," says Brockmann, using three cities in his relatively small home country, Germany, as examples of nearby places.

But how do you calculate the effective distances?

The researchers show that these distances can be determined directly from the air traffic network: if many people travel from city A to city B, the effective distance from A to B is small; if only a few people travel from A to B, the effective distance is large. This is also why flights are canceled and borders, or even entire cities, are closed during the global spread of the coronavirus.
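The paper makes this idea precise. As we understand its definition, if P(a, b) is the fraction of all passengers leaving city a who fly to city b, a direct hop has effective length 1 - ln P(a, b), and the effective distance between cities that are not directly connected is the length of the shortest path. The Python sketch below implements that reading with Dijkstra's algorithm; the passenger counts and the city names A, B and C are hypothetical.

```python
import heapq
import math


def transition_probabilities(flux):
    """Turn passenger counts into P[a][b]: share of travelers leaving a who go to b."""
    probs = {}
    for origin, targets in flux.items():
        total = sum(targets.values())
        if total == 0:
            continue
        probs[origin] = {dest: count / total
                         for dest, count in targets.items() if count > 0}
    return probs


def effective_distances(flux, source):
    """Shortest effective distance from source to every reachable city.

    A single hop a -> b has length 1 - ln(P[a][b]), following the paper's
    definition as we understand it; multi-hop distances use Dijkstra's algorithm.
    """
    probs = transition_probabilities(flux)
    dist = {source: 0.0}
    queue = [(0.0, source)]
    done = set()
    while queue:
        d, node = heapq.heappop(queue)
        if node in done:
            continue
        done.add(node)
        for neighbor, p in probs.get(node, {}).items():
            candidate = d + 1.0 - math.log(p)
            if candidate < dist.get(neighbor, math.inf):
                dist[neighbor] = candidate
                heapq.heappush(queue, (candidate, neighbor))
    return dist


if __name__ == "__main__":
    # Hypothetical daily passenger counts between three made-up cities.
    flux = {
        "A": {"B": 900, "C": 100},
        "B": {"A": 900, "C": 100},
        "C": {"A": 100, "B": 100},
    }
    for city, d in sorted(effective_distances(flux, "A").items()):
        print(f"A -> {city}: {d:.2f}")
```

With these numbers, B (90 percent of A's travelers) ends up about 1.1 effective units from A and C (10 percent) about 3.3: a strong connection means a short effective distance, regardless of geography.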

If we look at complex geographical epidemic patterns, such as the global spread of SARS in 2003 or influenza A (H1N1) in 2009, in terms of effective distances, the spread patterns become regular. We see the same circular waves that we observe locally when we narrow our view down to a specific geographical region. The spread stays circular, which is easier to describe mathematically and to visualize graphically.

The researchers claim that modern epidemic spread patterns do not appear to be fundamentally different from historical ones; they have only become harder to discern because modern mobility makes them far more complex.

An important aspect of the theory for practical applications is that effective-distance patterns only have a simple geometry when they are viewed from the virus's real place of origin. This means you can determine the origin of an outbreak by computing the current spread pattern from the perspective of every possible location and quantifying how circular the pattern is in each case. The true place of origin yields the highest score, meaning the spread looks most clearly circular from there.
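The origin search can be sketched as a loop over candidate locations. The paper's exact circularity measure is not reproduced here; as a simple stand-in, this Python sketch scores each candidate by the correlation between its effective distance to each affected city and the day the outbreak arrived there, assuming that the true origin is where arrival times line up most neatly with effective distance. All distances and arrival days are made-up.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sd_x == 0 or sd_y == 0:
        return 0.0
    return cov / (sd_x * sd_y)


def most_likely_origin(eff_dist, arrival_day):
    """Score every candidate origin and return (best_candidate, scores).

    eff_dist[candidate][city]: effective distance from candidate to city
    arrival_day[city]: day on which the outbreak reached city
    The score (correlation of distance and arrival day) is a simple stand-in
    for "how circular the spread looks" from that candidate.
    """
    cities = sorted(arrival_day)
    scores = {}
    for candidate, dists in eff_dist.items():
        xs = [dists[city] for city in cities]
        ys = [arrival_day[city] for city in cities]
        scores[candidate] = pearson(xs, ys)
    best = max(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    # Entirely hypothetical effective distances and arrival days.
    arrival_day = {"A": 0, "B": 5, "C": 10, "D": 15}
    eff_dist = {
        "A": {"A": 0, "B": 2, "C": 4, "D": 6},
        "B": {"A": 2, "B": 0, "C": 2, "D": 4},
        "C": {"A": 4, "B": 2, "C": 0, "D": 2},
        "D": {"A": 6, "B": 4, "C": 2, "D": 0},
    }
    best, scores = most_likely_origin(eff_dist, arrival_day)
    print("scores:", {k: round(v, 2) for k, v in scores.items()})
    print("most likely origin:", best)
```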

The original paper is "The Hidden Geometry of Complex, Network-Driven Contagion Phenomena" (Brockmann and Helbing, Science, 2013).

Nvidia is calling on gaming PC owners to put their systems to work fighting COVID-19

If you happen to have a gaming PC with an Nvidia graphics card, consider lending some of its graphics power to help fight the COVID-19 outbreak.

Nvidia is calling on PC gamers to download the Folding@home application and share their spare clock cycles to advance the world's knowledge of the coronavirus. The program links your computer to an international network that harnesses distributed processing power to tackle massive computing tasks, something gaming GPUs are good at. This computing power is used to simulate the dynamics of the virus's proteins, helping researchers better understand the new disease and look for potential drug targets.

No worries: you can turn off the application and take back your GPU's full power whenever you like.

The application can be downloaded from foldingathome.org.

Chinese supercomputer uses Artificial Intelligence to diagnose patients from chest scans

The system analyzes hundreds of images in seconds and can quickly distinguish between patients infected with the coronavirus and those with common pneumonia or other lung diseases. The accuracy of the analysis is at least 80%. The system highlights the areas of the patient's lungs that require special attention.

It also provides a score from zero to ten estimating how likely it is that the person has contracted COVID-19.

The system's performance improves as the number of training samples increases. It has been used to help medical teams fighting the coronavirus in more than 30 hospitals in Wuhan and other Chinese cities.

By the way, China has offered free use of the machine to the rest of the world.

The Canadian startup BlueDot tracks infectious disease outbreaks

BlueDot, a Toronto-based startup, uses artificial intelligence, machine learning and big data to predict the outbreak and spread of infectious diseases. On Dec. 31, 2019, the company alerted its customers, including government clients, to a potential cluster of unusual pneumonia cases around a market in Wuhan, China.

Nine days passed before the WHO alerted the public to the danger of a novel coronavirus.

BlueDot's proprietary software is designed to locate, track and predict the spread of viruses. It gathers data on diseases around the world 24 hours a day. Part of this data comes from organizations such as the Centers for Disease Control and Prevention (CDC) and the WHO.

However, most of BlueDot's data comes from outside official healthcare sources: travelers' movements on commercial flights worldwide; human, animal and insect population data; climate change data; and information from journalists and healthcare workers that can be found on the Internet.

BlueDot's staff classify the data manually, then filter it for relevant keywords and apply natural language processing and statistical machine learning to train their systems.
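BlueDot's actual pipeline is proprietary, so the following is only a hypothetical illustration of the keyword-filtering step: it counts keyword matches in short English reports and keeps the relevant ones, most matches first. The keyword list and the reports are invented, and a real system would add language-specific tokenization (for example for Chinese text) and trained models on top.

```python
import re

# Hypothetical keyword list; the real system's keywords and models are not public.
KEYWORDS = {"pneumonia", "outbreak", "unexplained", "cluster", "fever"}


def keyword_hits(text, keywords=KEYWORDS):
    """Count how many words in text are in the keyword set (case-insensitive)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return sum(1 for token in tokens if token in keywords)


def filter_reports(reports, keywords=KEYWORDS, min_hits=1):
    """Keep reports with at least min_hits keyword matches, most matches first."""
    scored = [(keyword_hits(report, keywords), report) for report in reports]
    relevant = [pair for pair in scored if pair[0] >= min_hits]
    relevant.sort(key=lambda pair: pair[0], reverse=True)  # stable for ties
    return [report for _, report in relevant]


if __name__ == "__main__":
    # Made-up example reports.
    reports = [
        "Hospital reports several fever cases",
        "Local football team wins the championship",
        "Cluster of unexplained pneumonia cases reported near a market",
    ]
    for report in filter_reports(reports):
        print(keyword_hits(report), report)
```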

BlueDot also sends alerts to government, business and public health clients.

At the start of the COVID-19 outbreak, BlueDot's software flagged Chinese-language articles reporting 27 new pneumonia cases associated with the Wuhan market. BlueDot could also identify the cities most highly connected to Wuhan, using global airline ticketing data to help predict where potentially infected people might travel.

BlueDot predicted that the following international destinations would receive the highest number of travelers from Wuhan: Bangkok, Hong Kong, Tokyo, Phuket, Seoul and Singapore. As predicted, these cities at the top of the list had the first coronavirus cases outside of China.
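The ticketing-data step can be illustrated in the same spirit. Assuming hypothetical booking records of the form (origin, destination, passengers), ranking destinations comes down to summing passengers per destination; this Python sketch does that with `collections.Counter`. The destinations X, Y and Z and all counts are placeholders, not BlueDot's data.

```python
from collections import Counter


def top_destinations(bookings, origin, n=3):
    """Sum passengers per destination for trips leaving origin; return the top n.

    bookings: iterable of (origin, destination, passengers) records.
    """
    totals = Counter()
    for src, dest, passengers in bookings:
        if src == origin:
            totals[dest] += passengers
    return totals.most_common(n)


if __name__ == "__main__":
    # Made-up booking records; destinations X, Y and Z are placeholders.
    bookings = [
        ("Wuhan", "X", 120),
        ("Wuhan", "Y", 300),
        ("Wuhan", "X", 200),
        ("Elsewhere", "Y", 999),
        ("Wuhan", "Z", 50),
    ]
    print(top_destinations(bookings, "Wuhan", n=2))
```

Records that do not start at the chosen origin are ignored, so the large "Elsewhere" booking does not push Y to the top.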

What to expect?

In the current coronavirus outbreak, we can use artificial intelligence in at least the following three ways:

- support in the development of vaccines against COVID-19

- scan quickly through existing drugs to help see if any of them may be repurposed

- help find a medicine to fight both the current and future coronavirus outbreaks

Still, let's be realistic about what AI can do for us. As for timing, the earliest that something like this is likely to be accomplished is 18 to 24 months away: manufacturing scale-up, safety testing and other steps will delay the results we need.

Where to find data to work on?

Johns Hopkins University recently made two datasets available: one containing SARS-CoV-2 reports from different countries and regions, and another containing time series of confirmed cases, deaths and recoveries. Both are available for download from a GitHub repository that is updated daily.
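As a starting point for working with such time series, this Python sketch sums cumulative counts per country and converts them into daily new cases, using only the standard library. It assumes a wide CSV layout with the columns Province/State, Country/Region, Lat and Long followed by one column per date, which is how we recall the repository's time-series files; column and file names have changed over time, so check the current files first. The sample rows are made-up.

```python
import csv
import io
from collections import defaultdict

# Tiny made-up sample in the assumed wide layout: one row per region,
# four metadata columns, then one cumulative count per date.
SAMPLE_CSV = """Province/State,Country/Region,Lat,Long,1/22/20,1/23/20,1/24/20
Region A,Country X,0.0,0.0,10,15,30
Region B,Country X,0.0,0.0,2,4,9
,Country Y,0.0,0.0,0,1,1
"""


def totals_by_country(csv_text):
    """Sum the cumulative counts of all regions belonging to the same country."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    dates = header[4:]
    totals = defaultdict(lambda: [0] * len(dates))
    for row in reader:
        if not row:
            continue
        country = row[1]
        for index, value in enumerate(row[4:]):
            totals[country][index] += int(value)
    return dates, dict(totals)


def daily_new(cumulative):
    """Convert a cumulative series into day-over-day increases."""
    return [later - earlier for earlier, later in zip(cumulative, cumulative[1:])]


if __name__ == "__main__":
    dates, totals = totals_by_country(SAMPLE_CSV)
    for country, series in totals.items():
        print(country, dict(zip(dates[1:], daily_new(series))))
```

Real files may contain empty or corrected cells, so expect to add some cleaning before the `int()` conversion.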

Other useful links: