Classification with Naive Bayes

Basics from Statistics

Statistics deals with the probability of some event. In statistics an event is a subset of outcomes. If we take a dice and throw it, the events might be:

- the outcome is an even number

- the outcome is less than three

- the outcome is not an uneven number, etc...

Imagine you want to build an e-mails spam filter. So, initially you have some e-mails. What is the probability that an e-mail is HAM (a good one) and not SPAM?

We can find the probability of an e-mail being HAM: P(HAM) - find the prior probability that an e-mail is HAM, given that the context is known.

A prior probability P(A) is a probability of an event A without knowing an event B.

The same with the SPAM e-mails:

Probability Distribution

Probability distribution is a function that outputs some values between 0 and 1. Those values must sum up to 1 in the end.

Conditional Probability

The right question here would be: What is the probability of an event A if the event B is known (condition)?

For example: p(word | HAM) what's the probability of a word occurring given that this word is HAM?

"Conditional" means: what's the probability of one event (A) conditioned on the probability values of another event (B) ∩ P(A|B)?

Distribution

The distribution of a statistical data set gives us a function which depicts some values (data). This function describes frequency of those values. When we deal with an organized distribution of data, values are often ordered from smallest to largest.

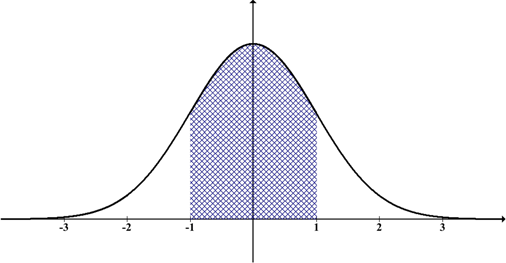

There are many other different kinds of distribution. One of the most common and well-known is called the normal distribution, also known as the Bell-shaped curve. The normal distribution is based on data that is continuous. Most of the data (approx 67%) in the normal distribution are centered around the mean (the middle part) and as you move farther out on either side of the mean, you find fewer and fewer values.

The Mean

The mean (also average) describes some central tendency of the data. It is the one number that represents the whole data set most optimal. It is also can be viewed as the "central number" in the data set.

Joint Distribution

Joint distribution is a dependent distribution when two events happen together:

p(A and B) = p(A ∩ B) intersection of two (or more) events

Notice how the conditional probability equation P(A | B) = P(A ∩ B) / P(B) can be seen as:

P(A ∩ B) = P(A | B) * P(B)

Marginal Distribution

Marginal distribution is an independent distribution. It doesn't reference values of the other variables. So, p(A) is not conditioned on another event. Marginal distribution sums the totals for the probabilities. Those probabilities are found in the table margins (as the name "marginal" says).

Marginal distribution contrasts with conditional distribution because in marginal distribution the variables are independent.

Two events A and B are called to be independent if their joint probability equals the product of their probabilities:

P(A ∩ B) = P(A) * P(B)

Independence of Variables:

The random variables are independent if: p(x,y) = p(x) p(y)

Example: if you trow three dice, the numbers those three dice show are statistically independent to each other.

Conditional vs. Marginal Distributions

Conditional distribution finds probabilities for a subsets of data. If we have two random variables, we want to determine the probability for one random variable, given (with some condition) restrictions for the other random variable.

Marginal distribution is the distribution of one random variable without any condition based on some other random variable.

Bayes Theorem

P(A|B) : posterior: given B, what is the probability of A occurring?

P(B|A) : likelihood (how well the model predicts data)

P(A) : prior probability: a degree how much we originally believed the model before new evidence

P(B) : evidence: chance of getting any positive result

Spam Filtering with Bayes Theorem

Spam filtering is one of the applications of Bayes Theorem.

p(words|spam) : conditional Bag of Words (BOW) probability

p(spam) : prior probability (without knowledge of the other event) that an email is assigned to a category (spam or ham)

p(words) : e-mail's content probability without knowing the category (spam or ham) also BOW probability

With the Bayes Theorem we can predict the chance a message is spam or not given the presence of certain words. Clearly, words like "hot girl" and "fast cash for free" are more probable to appear in spam messages than in not spam ones.

The Bayes Theorem is often referred to as Naive Bayes. Why "naive"? It is called naive because the theorem's assumption is that the measurements don't depend on each other which is almost never the case in real life. But with Naive Bayes if several factors are independent, the probability of seeing them together is the product of their probabilities.

Take the following example : an animal can be considered to be a panda if his/her weight = 85 kg, height = 77 and favorite food = bamboo. Even if these features depend on each other, an assumption of a Naive Bayes classifier sees all of these properties as independent contributors to the probability that this animal is indeed a panda.

There are some disadvantages of Naive Bayes if applied as a classifier in for example a machine learning classification task:

- If a data point belongs to one category in the test set and this same data point is not observed in the training set, then the classifier will output zero probability for this data point. This means because of this zero frequency, the classifier cannot give any prediction. Thus the division by zero must be avoided with the help of other tools like smoothing (e.g. the Laplace Smoothing)

- As already said before, the "naive" part of the name is there because of the assumption of predictor independence: with Naive Bayes we assume that the presence of a class feature is not related to the presence of some other feature, given the class variable. We make assumptions which may or also may not be correct in the end.

Decision Rule

If we have a Binary Naive Bayes text- classificator, how do we predict the right class for a word or text? There is a decision rule for this:

But what if we have more than two categories and we have to deal with multi class classification?

Then the decision rule will be:

- compute the probability for each category as p(text|cat1)*p(cat1), p(text|cat2)*p(cat2), p(text|cat3)*p(cat3), etc ...

- then choose the highest score.

Calculating with Logarithms

When multiplying many small probabilities, the result may quickly go towards 0. So it's better to avoid the multiplication of probabilities. Instead, we can use the sum of the logarithmized probabilities: log(a * b * c *...) = log(a) + log(b) + log(c) + ... For example: if you have to multiply 0.0001 * 0.001 * 0.00001 * 0.01 together, you'll get 0.00000000000001, which is not that handy to work with. Instead you can represent your results in the following way:

log10(0.0001*0.001*0.00001*0.01) =-4+(-3)+(-5)+(-2) = -14

... -14 is easier to handle as the result with lot's of zeros.

Basics of Logarithms:

loga(y * z) = logay + logaz

loga(y / z) = logay - logaz

loga(1 / y) = loga1 - logay = 0 - logay = - logay

loga z b = b * loga * z

loga a b = b

loga a = 1

Now we can adjust our Decision Rule from above to Logarithms:

Decision Rule with Logarithms

log P(HAM|text) > log P(SPAM|text)

or, following the Decision Rule from above:

log P(HAM|text) - log P(SPAM|text) > 0

Note: If a log has no base written, you assume that the base is default 10

Summary

Naive Bayes can be used for different classification tasks. It is called naive because of the assumption that all the variables are independent which almost never happens in real live.

The theorem measures how much we can trust the evidence.

Naive Bayes is often used in tasks like: fast multi class prediction, text classification (spam filtering, sentiment analysis), credit scoring and recommender systems.

A Bayesian Neural Network can be very convenient, when we want to quantify some uncertainty. Bayesian neural networks can help in preventing overfitting.

Further recommended readings: